Concordia

#include <tokenized_sentence.hpp>

Public Member Functions | |

| TokenizedSentence (std::string sentence) | |

| virtual | ~TokenizedSentence () |

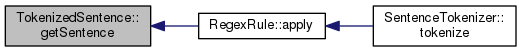

| std::string | getSentence () const |

| std::string | getOriginalSentence () const |

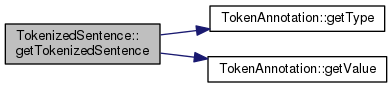

| std::string | getTokenizedSentence () const |

| std::list< TokenAnnotation > | getAnnotations () const |

| std::vector< INDEX_CHARACTER_TYPE > | getCodes () const |

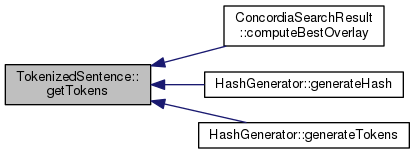

| std::vector< TokenAnnotation > | getTokens () const |

| void | generateHash (boost::shared_ptr< WordMap > wordMap) |

| void | generateTokens () |

| void | toLowerCase () |

| void | addAnnotations (std::vector< TokenAnnotation > annotations) |

A sentence after tokenizing operations. The class holds the current string representation of the sentence along with the list of annotations. It also allows for generating a hash; after that operation it additionally holds the list of hashed codes and the corresponding tokens.

| explicit TokenizedSentence::TokenizedSentence | ( | std::string | sentence | ) |

Constructor.

| virtual TokenizedSentence::~TokenizedSentence | ( | ) |

Destructor.

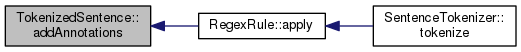

| void TokenizedSentence::addAnnotations | ( | std::vector< TokenAnnotation > | annotations | ) |

Add new annotations to the existing annotations list. Assumptions:

| annotations | list of annotations to be added |

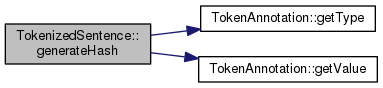

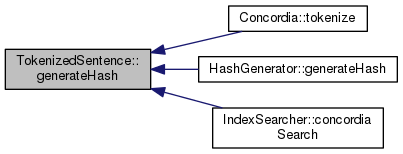

| void TokenizedSentence::generateHash | ( | boost::shared_ptr< WordMap > | wordMap | ) |

Generates the hash from the annotations. Only annotations of type word and named entity are taken into account: each is encoded and appended to the code list, and the corresponding annotation is appended to the tokens list.

| wordMap | word map to use when encoding tokens |
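The filtering and encoding described for generateHash can be pictured with a small standalone sketch. It does not use the Concordia headers: `AnnotationType`, `Annotation`, `ToyWordMap`, `HashResult` and `hashAnnotations` are hypothetical stand-ins invented for illustration, and the way the toy word map assigns codes is an assumption, not the behaviour of the real `WordMap`.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-ins for illustration only; these are NOT the Concordia types.
enum class AnnotationType { Word, NamedEntity, StopWord, HtmlTag };

struct Annotation {
    AnnotationType type;
    std::string value;
};

// Toy word map (assumption): each unseen value gets the next free integer code.
class ToyWordMap {
public:
    int codeFor(const std::string& word) {
        auto it = codes_.find(word);
        if (it != codes_.end()) {
            return it->second;
        }
        const int code = static_cast<int>(codes_.size());
        codes_.emplace(word, code);
        return code;
    }

private:
    std::map<std::string, int> codes_;
};

struct HashResult {
    std::vector<int> codes;          // one code per hashed token
    std::vector<Annotation> tokens;  // annotations that were hashed
};

// Keep only word and named-entity annotations, encode them, and record
// the matching annotation in the tokens list.
HashResult hashAnnotations(const std::vector<Annotation>& annotations,
                           ToyWordMap& wordMap) {
    HashResult result;
    for (const Annotation& a : annotations) {
        if (a.type == AnnotationType::Word ||
            a.type == AnnotationType::NamedEntity) {
            result.codes.push_back(wordMap.codeFor(a.value));
            result.tokens.push_back(a);
        }
    }
    // Codes and tokens are built in lockstep, so they stay parallel.
    assert(result.codes.size() == result.tokens.size());
    return result;
}
```

In this sketch, stop words and HTML tags remain in the input annotation list but contribute neither a code nor a token, which mirrors the distinction the page draws between getAnnotations and getTokens.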

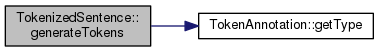

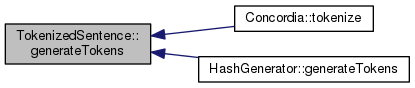

| void TokenizedSentence::generateTokens | ( | ) |

Generates tokens from the annotations. Only annotations of type word and named entity are taken into account. Unlike in generateHash, they are not encoded or added to the code list; the corresponding annotations are only added to the tokens list.

| inline std::list< TokenAnnotation > TokenizedSentence::getAnnotations | ( | ) | const |

Getter for the list of all annotations. This method returns every annotation, including those not considered in the hash, i.e. stop words and HTML tags.

|

inline |

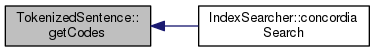

Getter for codes list. This data is available after calling the hashGenerator method.

| inline std::string TokenizedSentence::getOriginalSentence | ( | ) | const |

Getter for the original string sentence, which was used for extracting tokens.

| inline std::string TokenizedSentence::getSentence | ( | ) | const |

Getter for the string sentence, which might have been modified during tokenization.

| std::string TokenizedSentence::getTokenizedSentence | ( | ) | const |

Method for getting the tokenized sentence in string format (tokens separated by single spaces).
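As a rough illustration of the output format, the sketch below joins token strings with single spaces. `joinWithSingleSpaces` is a hypothetical helper written for this page, and it takes plain strings rather than TokenAnnotation objects; how the real method obtains each token's text is not shown here.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: joins token strings with exactly one space between
// neighbours and no leading or trailing space.
std::string joinWithSingleSpaces(const std::vector<std::string>& tokens) {
    std::string out;
    for (std::size_t i = 0; i < tokens.size(); ++i) {
        if (i > 0) {
            out += ' ';
        }
        out += tokens[i];
    }
    return out;
}
```

An empty token list yields an empty string in this sketch; whether the library behaves the same way is an assumption.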

| inline std::vector< TokenAnnotation > TokenizedSentence::getTokens | ( | ) | const |

Getter for the tokens list. This method returns only those annotations considered in the hash, i.e. words and named entities.

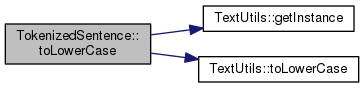

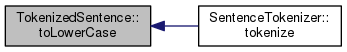

| void TokenizedSentence::toLowerCase | ( | ) |

Transform the sentence to lower case.
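For readers unfamiliar with the operation, a minimal ASCII-only sketch of lower-casing a sentence is shown below. `toLowerAscii` is a hypothetical free function, not part of Concordia, and the library's own implementation may well handle non-ASCII (for example UTF-8) text differently.

```cpp
#include <algorithm>
#include <cctype>
#include <string>

// Hypothetical ASCII-only helper: returns a lower-cased copy of the input.
// Bytes outside the ASCII letters are left unchanged in the default "C" locale.
std::string toLowerAscii(std::string text) {
    std::transform(text.begin(), text.end(), text.begin(),
                   [](unsigned char c) {
                       return static_cast<char>(std::tolower(c));
                   });
    return text;
}
```

The lambda takes `unsigned char` because passing a negative `char` value to `std::tolower` is undefined behaviour.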