Concordia

#include <concordia.hpp>

Public Member Functions

| Return type | Member function |
|---|---|
| | Concordia () |
| | Concordia (const std::string &indexPath, const std::string &configFilePath) throw (ConcordiaException) |
| virtual | ~Concordia () |
| std::string & | getVersion () |
| TokenizedSentence | tokenize (const std::string &sentence, bool byWhitespace=false, bool generateCodes=true) throw (ConcordiaException) |
| std::vector< TokenizedSentence > | tokenizeAll (const std::vector< std::string > &sentences, bool byWhitespace=false, bool generateCodes=true) throw (ConcordiaException) |
| TokenizedSentence | addExample (const Example &example) throw (ConcordiaException) |
| void | addTokenizedExample (const TokenizedSentence &tokenizedSentence, const SUFFIX_MARKER_TYPE id) throw (ConcordiaException) |
| void | addAllTokenizedExamples (const std::vector< TokenizedSentence > &tokenizedSentences, const std::vector< SUFFIX_MARKER_TYPE > &ids) throw (ConcordiaException) |
| std::vector< TokenizedSentence > | addAllExamples (const std::vector< Example > &examples) throw (ConcordiaException) |
| MatchedPatternFragment | simpleSearch (const std::string &pattern, bool byWhitespace=false) throw (ConcordiaException) |
| MatchedPatternFragment | lexiconSearch (const std::string &pattern, bool byWhitespace=false) throw (ConcordiaException) |
| std::vector< AnubisSearchResult > | anubisSearch (const std::string &pattern) throw (ConcordiaException) |
| boost::shared_ptr< ConcordiaSearchResult > | concordiaSearch (const std::string &pattern, bool byWhitespace=false) throw (ConcordiaException) |
| void | loadRAMIndexFromDisk () throw (ConcordiaException) |
| void | refreshSAfromRAM () throw (ConcordiaException) |
| void | clearIndex () throw (ConcordiaException) |

The Concordia class is the main access point to the library. This class holds references to three of the four main data structures used by Concordia: the hashed index, the markers array and the suffix array. The fourth, the word map, is maintained by the HashGenerator class. Concordia has references to:

Whenever necessary, the data structures and tools held by Concordia are passed via smart pointers to the methods that carry out specific functionality.
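A minimal sketch of that sharing pattern follows. Every name in it (the aliases HashedIndex, MarkersArray and SuffixArray, the struct MiniConcordia and the function countIndexedTokens) is a hypothetical stand-in, not a library type. It uses std::shared_ptr, whereas the library's public signatures use boost::shared_ptr; both provide the same shared-ownership semantics for this purpose.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-ins for the three structures the facade holds.
using HashedIndex = std::vector<int>;
using MarkersArray = std::vector<long>;
using SuffixArray = std::vector<std::size_t>;

// Toy facade: owns the structures through shared pointers.
struct MiniConcordia {
    std::shared_ptr<HashedIndex> hashedIndex = std::make_shared<HashedIndex>();
    std::shared_ptr<MarkersArray> markers = std::make_shared<MarkersArray>();
    std::shared_ptr<SuffixArray> suffixArray = std::make_shared<SuffixArray>();
};

// A worker receives only the structure it needs, by smart pointer, and
// reads the same data the facade owns without copying it.
std::size_t countIndexedTokens(std::shared_ptr<const HashedIndex> index) {
    return index->size();
}
```

Because ownership is shared, a structure stays alive as long as any holder still references it, so a worker never has to know whether the facade outlives it.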

Concordia::Concordia ()

Parameterless constructor.

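explicit Concordia::Concordia (const std::string &indexPath, const std::string &configFilePath) throw (ConcordiaException)

Constructor.

| Parameter | Description |
|---|---|
| indexPath | path to the index directory |
| configFilePath | path to the Concordia configuration file |

Exceptions: ConcordiaException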
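The documentation does not say which conditions make the constructor throw. The sketch below assumes, purely for illustration, that an empty index path or an unreadable configuration file counts as an error, and shows a caller guarding construction with try/catch. IndexException, MiniIndex and tryOpen are hypothetical stand-ins for ConcordiaException and the real class. The sketch also omits the throw (...) clause: dynamic exception specifications were deprecated in C++11 and removed in C++17, so compilers may reject such declarations in C++17 mode.

```cpp
#include <cassert>
#include <fstream>
#include <stdexcept>
#include <string>

// Hypothetical stand-in for ConcordiaException.
class IndexException : public std::runtime_error {
public:
    using std::runtime_error::runtime_error;
};

// Hypothetical facade whose constructor, like the documented one, reports
// failure by throwing instead of leaving a half-initialized object behind.
class MiniIndex {
public:
    MiniIndex(const std::string &indexPath, const std::string &configFilePath) {
        if (indexPath.empty()) {
            throw IndexException("index path must not be empty");
        }
        std::ifstream config(configFilePath);
        if (!config) {
            throw IndexException("cannot open config file: " + configFilePath);
        }
    }
};

// Returns true if construction succeeded, false if it threw IndexException.
bool tryOpen(const std::string &indexPath, const std::string &configFilePath) {
    try {
        MiniIndex index(indexPath, configFilePath);
        return true;
    } catch (const IndexException &) {
        return false;
    }
}
```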

virtual Concordia::~Concordia ()

Destructor.

std::vector< TokenizedSentence > Concordia::addAllExamples (const std::vector< Example > &examples) throw (ConcordiaException)

Adds multiple examples to the index.

| Parameter | Description |
|---|---|
| examples | vector of examples to be added |

Exceptions: ConcordiaException
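A toy model of the batch call follows. It assumes that addAllExamples behaves like calling addExample once per example and returns one tokenized sentence per input, in input order. ToyExample, Tokens and ToyIndex are hypothetical, and tokenization here is plain whitespace splitting rather than the library's tokenizer.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins: an example is a sentence plus its id, and a
// "tokenized sentence" is just the list of whitespace-separated tokens.
struct ToyExample {
    std::string sentence;
    long id;
};
using Tokens = std::vector<std::string>;

struct ToyIndex {
    std::vector<std::pair<long, Tokens>> stored;  // (id, tokens) per example

    Tokens addExample(const ToyExample &example) {
        std::istringstream in(example.sentence);
        Tokens tokens;
        for (std::string token; in >> token;) tokens.push_back(token);
        stored.emplace_back(example.id, tokens);
        return tokens;
    }

    // Batch variant: one result per input example, in input order.
    std::vector<Tokens> addAllExamples(const std::vector<ToyExample> &examples) {
        std::vector<Tokens> results;
        results.reserve(examples.size());
        for (const ToyExample &example : examples) results.push_back(addExample(example));
        return results;
    }
};
```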

void Concordia::addAllTokenizedExamples (const std::vector< TokenizedSentence > &tokenizedSentences, const std::vector< SUFFIX_MARKER_TYPE > &ids) throw (ConcordiaException)

Adds multiple tokenized examples to the index.

| Parameter | Description |
|---|---|
| tokenizedSentences | vector of tokenized sentences to be added |
| ids | vector of ids of the sentences to be added |

Exceptions: ConcordiaException
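The two arguments are separate vectors, so presumably ids[i] is the id of tokenizedSentences[i]; the documentation does not state this explicitly. The hypothetical helper below makes that pairing explicit and rejects vectors of different lengths. Word codes are modeled as int and ids as long, both assumptions standing in for TokenizedSentence and SUFFIX_MARKER_TYPE.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical: a tokenized sentence reduced to its word codes.
using Codes = std::vector<int>;

// Pairs the i-th sentence with the i-th id; vectors of different length are
// rejected instead of silently dropping or misassigning examples.
std::vector<std::pair<long, Codes>> pairWithIds(const std::vector<Codes> &sentences,
                                                const std::vector<long> &ids) {
    if (sentences.size() != ids.size()) {
        throw std::invalid_argument("sentences and ids must have the same length");
    }
    std::vector<std::pair<long, Codes>> paired;
    paired.reserve(sentences.size());
    for (std::size_t i = 0; i < sentences.size(); ++i) {
        paired.emplace_back(ids[i], sentences[i]);
    }
    return paired;
}
```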

TokenizedSentence Concordia::addExample (const Example &example) throw (ConcordiaException)

Adds an Example to the index.

| Parameter | Description |
|---|---|
| example | example to be added |

Exceptions: ConcordiaException

void Concordia::addTokenizedExample (const TokenizedSentence &tokenizedSentence, const SUFFIX_MARKER_TYPE id) throw (ConcordiaException)

Adds a tokenized example to the index.

| Parameter | Description |
|---|---|
| tokenizedSentence | tokenized sentence to be added |
| id | id of the sentence to be added |

Exceptions: ConcordiaException
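To make the role of the id concrete, here is a toy layout, assumed for illustration only (Concordia's real encoding of markers may differ). The word codes of all sentences are concatenated into one hashed index, with each sentence followed by a sentinel, while a parallel markers array records the sentence id and token offset for every position. Marker, ToyIndex and SENTINEL are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sentinel code separating consecutive sentences.
constexpr int SENTINEL = -1;

// Hypothetical marker: which sentence a position belongs to, and where.
struct Marker {
    long sentenceId;
    std::size_t offset;
};

struct ToyIndex {
    std::vector<int> hashedIndex;  // word codes of all sentences, concatenated
    std::vector<Marker> markers;   // one marker per hashedIndex position

    void addTokenizedExample(const std::vector<int> &codes, long id) {
        for (std::size_t i = 0; i < codes.size(); ++i) {
            hashedIndex.push_back(codes[i]);
            markers.push_back({id, i});
        }
        hashedIndex.push_back(SENTINEL);  // end of this sentence
        markers.push_back({id, codes.size()});
    }
};
```

Because the markers array is parallel to the hashed index in this toy, any position found by a search maps back to its (sentence id, offset) pair in constant time.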

std::vector< AnubisSearchResult > Concordia::anubisSearch (const std::string &pattern) throw (ConcordiaException)

| Parameter | Description |
|---|---|
| pattern | pattern to be searched in the index |

Exceptions: ConcordiaException

void Concordia::clearIndex () throw (ConcordiaException)

Clears all the examples from the index.

Exceptions: ConcordiaException

boost::shared_ptr< ConcordiaSearchResult > Concordia::concordiaSearch (const std::string &pattern, bool byWhitespace = false) throw (ConcordiaException)

Performs a Concordia lookup on the index. This functionality is unique to the library and is designed to facilitate Computer-Aided Translation. For more info see Concordia searching.

| Parameter | Description |
|---|---|
| pattern | pattern to be searched in the index |
| byWhitespace | whether to tokenize the pattern by white space |

Exceptions: ConcordiaException
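As a rough, hypothetical illustration of the kind of data such a lookup gathers, the sketch below computes, for every start position in the pattern, the longest run of pattern tokens that also occurs contiguously in some indexed example. It is brute force over plain string tokens, not the library's suffix-array-based implementation, and the name longestMatches is invented for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

using Sentence = std::vector<std::string>;

// For every start position in the pattern, returns the length of the longest
// run of consecutive pattern tokens that also occurs contiguously in some
// example. Brute force; an index would answer this via its suffix array.
std::vector<std::size_t> longestMatches(const Sentence &pattern,
                                        const std::vector<Sentence> &examples) {
    std::vector<std::size_t> best(pattern.size(), 0);
    for (std::size_t start = 0; start < pattern.size(); ++start) {
        for (const Sentence &example : examples) {
            for (std::size_t pos = 0; pos < example.size(); ++pos) {
                std::size_t len = 0;
                while (start + len < pattern.size() && pos + len < example.size() &&
                       pattern[start + len] == example[pos + len]) {
                    ++len;
                }
                best[start] = std::max(best[start], len);
            }
        }
    }
    return best;
}
```

For the pattern "alice has a big dog" against the examples "alice has a cat" and "a big dog barked", this yields lengths 3, 2, 3, 2, 1. The fragments starting at positions 0 and 2 overlap on the token "a", so choosing a non-overlapping subset that best covers the pattern would be a separate step that this sketch does not attempt.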

std::string & Concordia::getVersion ()

Getter for version.

MatchedPatternFragment Concordia::lexiconSearch (const std::string &pattern, bool byWhitespace = false) throw (ConcordiaException)

Performs a search suited to the following scenario: Concordia is fed a lexicon (glossary) instead of a translation memory (TM). Like simple search, lexicon search requires the match to cover the whole pattern; in addition, it requires the match to cover the entire source of the example.

| Parameter | Description |
|---|---|
| pattern | pattern to be searched in the index |
| byWhitespace | whether to tokenize the pattern by white space |

Exceptions: ConcordiaException

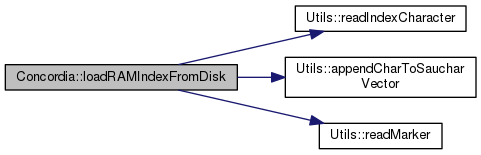
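The difference between the two match conditions can be stated as two predicates over token sequences. This is a toy model with hypothetical names (containsPattern, lexiconMatch) operating on plain string tokens, not the library's search.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

using Sentence = std::vector<std::string>;

// Simple-search condition (toy): the whole pattern occurs contiguously
// somewhere in the example.
bool containsPattern(const Sentence &example, const Sentence &pattern) {
    if (pattern.size() > example.size()) return false;
    for (std::size_t pos = 0; pos + pattern.size() <= example.size(); ++pos) {
        if (std::equal(pattern.begin(), pattern.end(), example.begin() + pos)) return true;
    }
    return false;
}

// Lexicon-search condition (toy): additionally, the match must span the
// entire example, i.e. pattern and example are the same token sequence.
bool lexiconMatch(const Sentence &example, const Sentence &pattern) {
    return pattern.size() == example.size() && containsPattern(example, pattern);
}
```

With a glossary entry "machine translation", an example "statistical machine translation" satisfies the simple-search condition but not the lexicon one, which is what keeps a glossary lookup from returning longer entries that merely contain the term.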

void Concordia::loadRAMIndexFromDisk () throw (ConcordiaException)

Loads the index files stored on disk (HDD) into RAM and generates the suffix array from the RAM-stored data structures. For more info see Concept of HDD and RAM index.

Exceptions: ConcordiaException
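Only the loading half is sketched here: a toy hashed index of 32-bit word codes is written to a stream and read back. A std::stringstream stands in for the index file, and the raw-binary format, saveIndex and loadIndex are all assumptions, not Concordia's on-disk format. In this model only the codes are persisted; the suffix array would be rebuilt in memory afterwards, as refreshSAfromRAM does.

```cpp
#include <cassert>
#include <cstdint>
#include <istream>
#include <ostream>
#include <sstream>
#include <vector>

// Toy persistence: the hashed index is stored as raw 32-bit codes.
void saveIndex(std::ostream &out, const std::vector<std::int32_t> &codes) {
    out.write(reinterpret_cast<const char *>(codes.data()),
              static_cast<std::streamsize>(codes.size() * sizeof(std::int32_t)));
}

// Reads codes back until the stream is exhausted.
std::vector<std::int32_t> loadIndex(std::istream &in) {
    std::vector<std::int32_t> codes;
    std::int32_t code;
    while (in.read(reinterpret_cast<char *>(&code), sizeof code)) {
        codes.push_back(code);
    }
    return codes;
}
```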

void Concordia::refreshSAfromRAM () throw (ConcordiaException)

Generates the suffix array from the data structures stored in RAM. For more info see Concept of HDD and RAM index.

Exceptions: ConcordiaException
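A toy model of why a refresh is needed: presumably, adding examples appends word codes to the RAM data, and the suffix array over them is not updated until it is regenerated. ToyRamIndex and its members are hypothetical, isStale is a toy size check, and the naive sort-based construction stands in for whatever algorithm the library actually uses.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Toy in-RAM index: word codes of all examples plus a suffix array over them.
struct ToyRamIndex {
    std::vector<int> codes;
    std::vector<std::size_t> suffixArray;

    // New examples only touch the RAM data; the suffix array goes stale.
    void add(const std::vector<int> &sentence) {
        codes.insert(codes.end(), sentence.begin(), sentence.end());
    }

    // Regenerates the suffix array from scratch: start positions sorted by
    // the lexicographic order of the suffixes that begin there.
    void refreshSuffixArray() {
        suffixArray.resize(codes.size());
        std::iota(suffixArray.begin(), suffixArray.end(), std::size_t{0});
        std::sort(suffixArray.begin(), suffixArray.end(),
                  [this](std::size_t a, std::size_t b) {
                      return std::lexicographical_compare(codes.begin() + a, codes.end(),
                                                          codes.begin() + b, codes.end());
                  });
    }

    bool isStale() const { return suffixArray.size() != codes.size(); }
};
```

For codes 3, 1, 2, 1 the refreshed array is 3, 1, 2, 0: the suffix [1] sorts before its extension [1, 2, 1], then [2, 1], then [3, 1, 2, 1].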

MatchedPatternFragment Concordia::simpleSearch (const std::string &pattern, bool byWhitespace = false) throw (ConcordiaException)

Performs a simple substring lookup on the index. For more info see Simple substring lookup.

| Parameter | Description |
|---|---|
| pattern | pattern to be searched in the index |
| byWhitespace | whether to tokenize the pattern by white space |

Exceptions: ConcordiaException

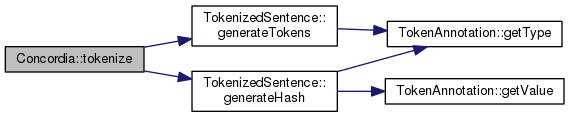
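A substring lookup over a suffix array can be sketched as two binary searches, because all suffixes that begin with the pattern form one contiguous block of the sorted array. The toy below works on integer word codes. buildSuffixArray and findAll are hypothetical names, the naive O(n² log n) construction is chosen for brevity, and the library's actual search may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// Naive suffix array: start positions sorted by the suffixes they begin.
std::vector<std::size_t> buildSuffixArray(const std::vector<int> &text) {
    std::vector<std::size_t> sa(text.size());
    std::iota(sa.begin(), sa.end(), std::size_t{0});
    std::sort(sa.begin(), sa.end(), [&text](std::size_t a, std::size_t b) {
        return std::lexicographical_compare(text.begin() + a, text.end(),
                                            text.begin() + b, text.end());
    });
    return sa;
}

// Returns the sorted start positions of every occurrence of pattern in text.
std::vector<std::size_t> findAll(const std::vector<int> &text,
                                 const std::vector<std::size_t> &sa,
                                 const std::vector<int> &pattern) {
    // Both comparators look only at the first pattern.size() tokens of a suffix.
    auto suffixLess = [&](std::size_t pos, const std::vector<int> &p) {
        return std::lexicographical_compare(
            text.begin() + pos, text.begin() + std::min(text.size(), pos + p.size()),
            p.begin(), p.end());
    };
    auto patternLess = [&](const std::vector<int> &p, std::size_t pos) {
        return std::lexicographical_compare(
            p.begin(), p.end(),
            text.begin() + pos, text.begin() + std::min(text.size(), pos + p.size()));
    };
    auto lo = std::lower_bound(sa.begin(), sa.end(), pattern, suffixLess);
    auto hi = std::upper_bound(lo, sa.end(), pattern, patternLess);
    std::vector<std::size_t> hits(lo, hi);
    std::sort(hits.begin(), hits.end());
    return hits;
}
```

Truncating each suffix to the pattern length preserves the sorted order, which is what makes both binary searches valid.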

TokenizedSentence Concordia::tokenize (const std::string &sentence, bool byWhitespace = false, bool generateCodes = true) throw (ConcordiaException)

Tokenizes the given sentence.

| Parameter | Description |
|---|---|
| sentence | sentence to be tokenized |
| byWhitespace | whether to tokenize the sentence by whitespace |
| generateCodes | whether to generate codes for tokens using WordMap |

Exceptions: ConcordiaException
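A toy version of whitespace tokenization with code generation, for illustration. Each distinct token gets the next free integer code from a word map, and codes are produced only when generateCodes is true. ToyWordMap, ToyTokenizedSentence and tokenizeByWhitespace are hypothetical. The sketch covers roughly the byWhitespace == true case only and does no normalization, which the library's tokenizer may perform.

```cpp
#include <cassert>
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Toy word map: assigns each distinct token the next free integer code.
class ToyWordMap {
public:
    int getCode(const std::string &token) {
        auto it = codes_.find(token);
        if (it != codes_.end()) return it->second;
        int code = static_cast<int>(codes_.size());
        codes_.emplace(token, code);
        return code;
    }

private:
    std::map<std::string, int> codes_;
};

struct ToyTokenizedSentence {
    std::vector<std::string> tokens;
    std::vector<int> codes;  // left empty when generateCodes is false
};

// Splits on whitespace only; looks up codes only when generateCodes is true.
ToyTokenizedSentence tokenizeByWhitespace(const std::string &sentence, ToyWordMap &wordMap,
                                          bool generateCodes = true) {
    ToyTokenizedSentence result;
    std::istringstream in(sentence);
    for (std::string token; in >> token;) {
        result.tokens.push_back(token);
        if (generateCodes) result.codes.push_back(wordMap.getCode(token));
    }
    return result;
}
```

Tokenizing "the cat saw the dog" with a fresh map yields codes 0, 1, 2, 0, 3: the repeated "the" reuses its code.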

std::vector< TokenizedSentence > Concordia::tokenizeAll (const std::vector< std::string > &sentences, bool byWhitespace = false, bool generateCodes = true) throw (ConcordiaException)

Tokenizes all the given sentences.

| Parameter | Description |
|---|---|
| sentences | vector of sentences to be tokenized |
| byWhitespace | whether to tokenize the sentences by whitespace |
| generateCodes | whether to generate codes for tokens using WordMap |

Exceptions: ConcordiaException
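When a batch is tokenized, the codes are only comparable if every sentence is encoded against the same word map, so that a repeated token gets the same code everywhere. Below is a toy sketch under that assumption, with a std::unordered_map standing in for WordMap and a hypothetical tokenizeAllToCodes function doing whitespace splitting only.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <unordered_map>
#include <vector>

// Encodes every sentence against one shared word map, so the same token
// receives the same code in every sentence of the batch.
std::vector<std::vector<int>> tokenizeAllToCodes(const std::vector<std::string> &sentences,
                                                 std::unordered_map<std::string, int> &wordMap) {
    std::vector<std::vector<int>> result;
    result.reserve(sentences.size());
    for (const std::string &sentence : sentences) {
        std::istringstream in(sentence);
        std::vector<int> codes;
        for (std::string token; in >> token;) {
            // emplace inserts only unseen tokens; the map size before the
            // insertion is the next free code.
            auto it = wordMap.emplace(token, static_cast<int>(wordMap.size())).first;
            codes.push_back(it->second);
        }
        result.push_back(codes);
    }
    return result;
}
```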