|

Concordia

|

|

Concordia

|

#include <sentence_tokenizer.hpp>

Public Member Functions | |

| SentenceTokenizer (boost::shared_ptr< ConcordiaConfig > config) throw (ConcordiaException) | |

| virtual | ~SentenceTokenizer () |

| TokenizedSentence | tokenize (const std::string &sentence, bool byWhitespace=false) |

Class for tokenizing sentence before generating hash. Tokenizer ignores unnecessary symbols, html tags and possibly stop words (if the option is enabled) in sentences added to index as well as annotates named entities. All these have to be listed in files (see Concordia configuration).

|

explicit | ||||||||||||||

Constructor.

| config | config object, holding paths to necessary files |

|

virtual |

Destructor.

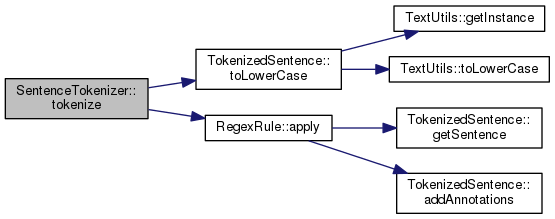

| TokenizedSentence SentenceTokenizer::tokenize | ( | const std::string & | sentence, |

| bool | byWhitespace = false |

||

| ) |

Tokenizes the sentence.

| sentence | input sentence |

| byWhitespace | whether to tokenize the sentence by whitespace |